Judging by the many blog posts and advocacy organizations that claim benefits for raw milk consumption (ranging from alleviating lactose intolerance to treating cancer), and given that milk in general has become a staple of the American diet, one would surmise that milk that is neither pasteurized nor homogenized is the biggest thing to hit the kitchen since the sliced bread you might pair with it.

However, in reality, it is estimated that less than 1% of U.S. milk production is consumed raw.1,6,8 Government agencies, in fact, advise against its consumption.2 Yet, driven by its supporters, raw milk continues to be a topic of heated debate on news channels and social media.

On a per-pound-consumed basis, outbreaks associated with raw milk consumption are disproportionately more common than outbreaks associated with pasteurized milk.6 Between 1993 and 2006, 121 outbreaks were traced back to dairy products. Of those outbreaks, raw dairy products were responsible for 73, with 1,575 ill individuals, 202 hospitalizations, and two deaths. By comparison, pasteurized products were responsible for 48 outbreaks, with 2,842 ill individuals, 37 hospitalizations, and one death. On a per-pound-consumed basis, raw milk products were responsible for at least 100 times more outbreaks, ill persons, hospitalizations and deaths than pasteurized products.
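The per-pound comparison can be illustrated with a minimal sketch. It takes the outbreak counts quoted above and assumes, purely for illustration, that exactly 1% of dairy consumption is raw; the article only states that the share is below 1%, and a smaller share would make the ratio larger. The function name and the share value are hypothetical, not taken from the cited study.

```python
def per_unit_rate_ratio(raw_events, pasteurized_events, raw_share):
    """Ratio of raw to pasteurized event rates per unit of product consumed.

    raw_share is the fraction of total consumption that is raw (0 < share < 1).
    """
    if not 0 < raw_share < 1:
        raise ValueError("raw_share must be strictly between 0 and 1")
    raw_rate = raw_events / raw_share                        # events per unit of raw product
    pasteurized_rate = pasteurized_events / (1 - raw_share)  # events per unit of pasteurized product
    return raw_rate / pasteurized_rate


RAW_OUTBREAKS = 73          # from the 1993-2006 figures quoted in the text
PASTEURIZED_OUTBREAKS = 48  # from the 1993-2006 figures quoted in the text
ASSUMED_RAW_SHARE = 0.01    # assumption: upper bound implied by "less than 1%"

outbreak_ratio = per_unit_rate_ratio(
    RAW_OUTBREAKS, PASTEURIZED_OUTBREAKS, ASSUMED_RAW_SHARE
)
print(f"Outbreaks per unit consumed, raw vs. pasteurized: about {outbreak_ratio:.0f}x")
```

Under this assumed share, the outbreak rate per unit consumed comes out at roughly 150 times higher for raw products, which is in line with the "at least 100 times" statement for outbreaks.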

Advocates of raw milk, who believe that the conventional processing applied to pasteurized milk (heat treatment, homogenization, etc.) diminishes the health benefits stated above, suggest that good practices alone can guarantee a safe supply of raw milk. Certainly, because it receives no heat treatment, raw milk relies exclusively on proper production, transportation and storage practices for its safety. These practices are now part of a well-established set of standard operating procedures that yield high-quality milk. In the United States, the Grade A Pasteurized Milk Ordinance (PMO), published by the Food and Drug Administration, defines the minimum sanitary conditions under which milk is acceptable for fluid consumption, and most dairy farms today produce milk with total bacterial counts significantly below the maximum of 100,000 colony forming units per milliliter (CFU/ml) that the standard allows for raw milk.7,9 In fact, under excellent management and milking conditions, counts can fall below 1,000 CFU/ml.7

Based on these numbers, the United States probably has one of the safest and highest-quality milk supplies in the world. However, even under these regulated farming, milking, storage and transportation conditions, several independent studies have often detected human pathogens in milking facilities around the country.7 L. monocytogenes has been found in up to 12.6% of milking facilities, and infection with it can be fatal. Salmonella spp., which has shown a 6.1% prevalence in milking facilities, can cause severe gastrointestinal distress and death. Infection with pathogenic E. coli can cause permanent kidney damage and death; this pathogen has been found in up to 4% of surveyed dairy farms.

The fact is that milk, as a nutritious food with a close-to-neutral pH, is a good medium for the growth of these and other pathogens, and some pathogens (e.g., L. monocytogenes) are capable of growing at the refrigeration temperatures at which milk is stored.5 An additional and extremely important factor is that it takes only a few cells, sometimes as few as 10 according to estimates, to cause an infection.5

Microbial Testing: Not all Tests and Samples are Alike

Some believe that a successful microbial test of raw milk renders it safe to consume. Although testing is a powerful tool for food safety, producers and consumers should know that its value depends heavily on the quality of the sample taken and on the information that the particular microbial test can provide.

Coliform testing serves as a good example. Commonly used to assess the level of potential fecal contamination in a sample, this microbial test is performed by using a selective medium that inhibits the growth of many microorganisms and allows the growth of other organisms, namely those found in the intestinal tract of warm-blooded animals like cattle and humans, at a defined temperature.4 Therefore, although the levels of these organisms give an indication of the sanitary conditions in which the milk was produced, there are still many pathogens that will be overlooked by a coliform test, as they will not grow under the conditions described.

In addition, end-product testing for pathogens provides a limited amount of information, since pathogens could be present at very low levels and the contamination might not be homogeneous. Testing is only valuable as part of an integral food safety strategy that has been validated to reduce the risk of pathogens under the conditions in which the milk is produced. Otherwise, test results have little to no value.
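To see why low contamination levels limit end-product testing, consider the following rough sketch. It assumes an idealized model that is not taken from the article: pathogen cells are randomly and evenly dispersed in the milk (a Poisson model), and the test detects the pathogen whenever at least one cell lands in the sample. The concentration and sample volumes are hypothetical. Real contamination is often clustered, as noted above, so actual detection rates can differ from these figures.

```python
import math


def detection_probability(cells_per_ml, sample_volume_ml):
    """Chance that a sample contains at least one cell under a Poisson model.

    Assumes cells are randomly dispersed and that a single captured cell
    is always detected; both are simplifying assumptions.
    """
    if cells_per_ml < 0 or sample_volume_ml < 0:
        raise ValueError("concentration and volume must be non-negative")
    expected_cells = cells_per_ml * sample_volume_ml
    return 1.0 - math.exp(-expected_cells)


HYPOTHETICAL_CELLS_PER_ML = 0.01  # assumed level: 1 cell per 100 ml

for volume_ml in (1, 25, 100):  # hypothetical sample sizes
    p = detection_probability(HYPOTHETICAL_CELLS_PER_ML, volume_ml)
    print(f"{volume_ml:>3} ml sample: {p:.1%} chance of capturing at least one cell")
```

At this assumed level, a 1 ml sample would capture a cell only about 1% of the time, so a negative result says little about the rest of the lot.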

Petrifilm Plate Use on Milk Samples

In recent months, some advocacy organizations have claimed that raw milk is safe to consume after being successfully tested on Petrifilm Plates, a testing technology that has been a staple of the food industry, including dairy processing, for more than 30 years. It has long been recognized as an official method in AOAC International's Official Methods of Analysis.

Petrifilm Plate technology is available for several tests that can assess the quality and safety of milk as well as the environment in which it was produced. However, it is important to understand that any given test provides limited information regarding the types of organisms in the sample. Therefore, multiple indicator tests must be used to characterize the microorganisms present more fully. It is also critical to keep in mind that no test can substitute for good production practices and that, statistically speaking, outbreaks associated with raw milk have been far more common, per pound consumed, than those associated with pasteurized milk.

Environmental testing is arguably more important than end-product testing, as the environment is often the source of contamination. A quality and safety program should include a strong environmental sampling component. Environmental samples can be collected using a sponge for large surfaces or a swab for hard-to-reach areas. Collected samples can then be “plated” on the aerobic count plate (a 48-hour test) or the rapid aerobic count plate (a 24-hour test) to conduct a standard plate count, also known as an aerobic plate count, which gives a general indication of the microbial load in a product or environment. The test is conducted in a non-selective medium that allows the growth of a wide range of bacteria, which can then be visualized and enumerated (counted). It therefore provides an initial gauge of the sanitary conditions of the food product and the environment in which it is produced.

The results can be reported as CFU per unit of volume (milliliter, ml), weight (gram, g) or surface (square centimeter, cm²), depending on the type of sample tested. In the United States, Grade A milk must have a microbial load of less than 100,000 CFU/ml when raw and below 20,000 CFU/ml after pasteurization. The PMO also includes specifications for the bacterial loads of single-service containers and closures.
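The reporting units above follow from simple arithmetic on the colonies counted. The sketch below shows one common way to do the conversion and compares a milk result against the two Grade A limits quoted in the text. The colony counts, dilutions, diluent volume, swabbed area and the default 1 ml plated volume are hypothetical values chosen for illustration, and the function names are not part of any product or standard.

```python
RAW_LIMIT_CFU_PER_ML = 100_000         # Grade A raw milk limit quoted in the text
PASTEURIZED_LIMIT_CFU_PER_ML = 20_000  # Grade A pasteurized milk limit quoted in the text


def cfu_per_ml(colonies, dilution_factor, volume_plated_ml=1.0):
    """CFU per ml of the original sample.

    dilution_factor is 1 for an undiluted sample, 100 for a 1:100 dilution, etc.
    """
    return colonies * dilution_factor / volume_plated_ml


def cfu_per_cm2(colonies, dilution_factor, diluent_volume_ml, area_cm2,
                volume_plated_ml=1.0):
    """CFU per square centimeter for a sponge or swab sample.

    Assumes everything picked up from the surface is released into the diluent.
    """
    total_cfu = cfu_per_ml(colonies, dilution_factor, volume_plated_ml) * diluent_volume_ml
    return total_cfu / area_cm2


# Hypothetical raw milk plate: 85 colonies from 1 ml of a 1:100 dilution.
milk_count = cfu_per_ml(colonies=85, dilution_factor=100)
print(f"Raw milk: {milk_count:,.0f} CFU/ml "
      f"(below raw limit: {milk_count < RAW_LIMIT_CFU_PER_ML})")

# Hypothetical swab: 42 colonies, undiluted, 10 ml of diluent, 100 cm² swabbed.
surface_count = cfu_per_cm2(colonies=42, dilution_factor=1,
                            diluent_volume_ml=10, area_cm2=100)
print(f"Surface: {surface_count:.1f} CFU/cm²")
```

A count below the limit describes the overall microbial load only; it does not show that pathogens are absent.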

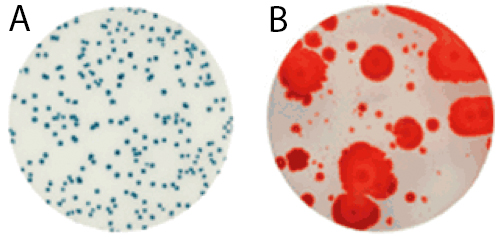

For raw milk, the time to results and the ease of reading make the Petrifilm Rapid Aerobic Count Plate an ideal testing tool. Launched in early 2015, it incorporates a dual-sensing indicator technology that yields blue/green and red colonies to facilitate enumeration. In addition, this plate was engineered with technology that resists the distortion often caused by spreader colonies (see Figure 1).

Coliform Counts Too

Enumerating microorganisms with the standard plate count is not enough. More testing is needed to indicate whether fecal contamination is present. The term “fecal coliforms” is used to describe a sub-group of coliforms, such as E. coli and Klebsiella spp., that are differentiated by their ability to ferment lactose at high temperatures.3 Enumeration of E. coli is arguably the best routine indicator of fecal contamination. E. coli is rarely found growing in environments outside the intestine, although it is able to survive there, and its enumeration is relatively simple and rapid. While the PMO has no coliform standard for raw milk, it states that the coliform count in pasteurized milk should not exceed 10 CFU/ml.

The Importance of a Comprehensive Safety and Quality Strategy

As the discussion of raw milk expands and its consumption is promoted by several groups, the safety of the product becomes a more general concern. As with other ready-to-eat products, safe raw milk consumption depends on integrated systems that assess, monitor, validate and verify the process and the environment in which processing, storage and distribution occur. Yet unlike other ready-to-eat products, or pasteurized milk for that matter, raw milk lacks any intervention to reduce the microbial load present. Therefore, monitoring this microbial load in the product and the environment is an important aspect of quality and safety.

However, it is important to understand that any kind of microbial testing is only meaningful when it is part of an integral, comprehensive safety and quality strategy. Test results alone cannot replace good practices or interventions designed to reduce microbial loads to acceptable levels, where the occurrence of foodborne pathogens is less likely.

References

1. Centers for Disease Control and Prevention. (2007). Foodborne Active Surveillance Network (FoodNet) Population Survey Atlas of Exposures, 2006-2007. Atlanta: U.S. Department of Health and Human Services, Centers for Disease Control and Prevention.

2. Centers for Disease Control and Prevention. “Raw Milk Questions and Answers”. Accessed March 2015. Retrieved from: http://www.cdc.gov/foodsafety/rawmilk/raw-milk-questions-and-answers.html.

3. Eijkman, C. (1904). Die Gärungsprobe bei 46° als Hilfsmittel bei der Trinkwasseruntersuchung. Zentr. Bakteriol. Parasitenk. Abt. I. Orig. 37:742.

4. Feng, P., Weagant, S.D., Grant, M.A., Burkhardt, W. (1998). Bacteriological Analytical Manual, 8th Edition, Revision A. Chapter 4 BAM: Enumeration of Escherichia coli and the Coliform Bacteria. Accessed March 2015. Retrieved from: http://www.fda.gov/Food/FoodScienceResearch/LaboratoryMethods/ucm064948.htm.

5. Food and Drug Administration. (2012). Bad Bug Book, Foodborne Pathogenic Microorganisms and Natural Toxins.

6. Langer, A.J., Ayers, T., Grass, J., Lynch, M., Angulo, F.J., Mahon, B.E. (2012). Nonpasteurized dairy products, disease outbreaks, and state laws—United States, 1993–2006. Emerg Infect Dis. 18(3):385–391.

7. Oliver, S.P., Boor, K.J., Murphy, S.C., Murinda, S.E. (2009). Food safety hazards associated with consumption of raw milk. Foodborne Pathog Dis. 6:793-806.

8. U.S. Department of Agriculture. “Milk production”. Accessed March 2015. Retrieved from: www.nass.usda.gov.

9. U.S. Department of Health and Human Services, Public Health Service, Food and Drug Administration. Grade “A” Pasteurized Milk Ordinance, 2009 Revision.